pile of thoughts: Indie Megabooth, Death in July, photon cloud, Juiciness, Internet Simulator 2014

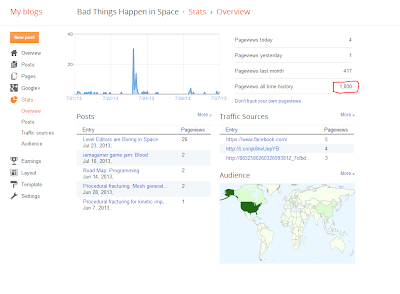

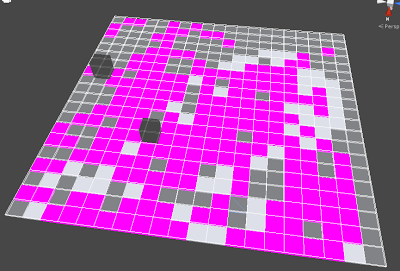

Ok! video games! Let's get started. I will be posting today about a pile of thoughts.

I have resumed development on Bad Things, and they are progressing!!! I have refactored the item system into something that is non-ultra-terrible. I guess I really just wanted multiple inheritance in C# but no taco. And now I am focusing on getting multiplayer working again. Multiplayer stuff is hard.

The Indie Megabooth

I have submitted ('submote') Bad Things Happen in Space to the Indie Megabooth for PAX East 2014!! Yay! In accordance with this venerable tradition, I will now work furiously on getting a fairly fully featured multiplayer build ready for PAX. I know that getting into the megabooth is nowhere near guaranteed, but I still want to have a game to show off in the case that I do make the cut. Also, ungiving up has something to do with dying in July.

The megabooth is more than a sweet way to show off your game at PAX; it's a great community of people who are all working