Procedural fracturing: Mesh generation and physics

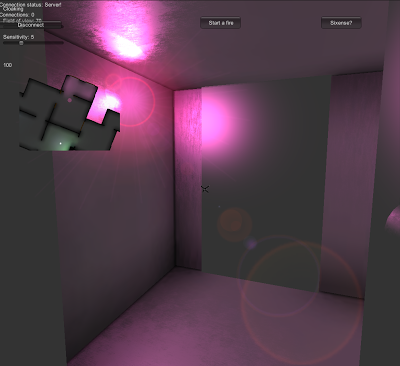

This will probably be the last post on procedural fracturing. Why? Because it's mostly done! In a few hours of heroic brain effort, I went from this:

Lines are drawn between centroids and vertices in this image. There's also an axis pointing right for debug purposes.

To this:

A fully procedural mesh!

The little things

After so much brain destruction over this, there were two bugs: one in finding the center of each circumcircle (I was dividing by zero somewhere and getting secret NaNs), and another in the centroid sorting algorithm.

I also facepalmed really hard because the first sentence on the wiki page for polygon triangulation is "A convex polygon is trivial to triangulate in linear time, by adding diagonals from one vertex to all other vertices." Oops. I threw out the copy/paste ear clipping algorithm and just wrote my own thing to add diagonals. It's funny how something can seem so simple and obvious in retrospect.

The only downside to